[ed] The business of textbooks and educational technology is in a period of disruption and change. Today we present part one of a three-part series that takes a quantitative view of this change. The study uses modeling techniques that have proven themselves in numerous other industries. The implications for education are fascinating and challenging.

Click here to read the introduction to this series.

Click here to read Part 2 – The Data

In today’s installment Paul Schumann gives an overview of the methodology and uses the reference library market as a case study. We start with this market because we have a relatively complete set of data for it and it demonstrates the model well. He then turns his attention to open source content and how it will affect the market. In later installments we look at markets where the data are sketchier but still conform to the model.

Tomorrow – Supplemental Materials, Basal Textbooks, Student Devices (Laptops, handhelds), Delivery Platforms (CD-ROM, Internet), and Electronic Media.

By Paul Schumann, Glocal Vantage, Inc.

Introduction

Information technologies diffuse through an industry by improving procedures, processes and products. The diffusion usually begins with incremental changes aimed at reducing costs or, more broadly, improving efficiency. This is like a virus infecting a living cell: the informed, or informatized, segment (we don’t have good language to describe the result) is transformed into something new. Informed segments of the economy then multiply their effects on the industry, radically changing it or destroying it.

The K12 publishing industry is one of the industries being so affected. Information technologies have found their way into the printing of books, their distribution, the way they are sold, and even the way we communicate about them. Now information technology is altering the very nature of publications, especially textbooks and supplemental materials. Information technologies developed to aid social change and societal development have begun to affect the industry, threatening to destroy it.

This series of blog entries summarizes meta-research on the industry, highlighting data that indicate the nature and rate of substitution of information technologies for print. The study reaches two overall conclusions. First, there are indications that substitution is under way in a number of areas. Second, we lack a coherent set of data on the industry that would enable us to make firm predictions.

[Ed note: The Association of Education Publishers (AEP) is undertaking a study that may help with this – no link yet.]

Substitution Analysis

For 36 years, substitution analysis has been a well-accepted method of technological forecasting. The analyses here use the Fisher-Pry model, which predicts behavior loosely analogous to the growth of biological systems. It produces the S-shaped (sigmoidal) curve familiar to many; the curve is shaped like an escalator. These natural growth processes share the properties of relatively slow early change, followed by steep growth, then a leveling off as size asymptotically approaches a limit.

Reference Library Case Study

We start with a look at the substitution of electronic media for print media in the reference library. The surrogate data we have are provided by the Association of Research Libraries (ARL), and they measure expenditures. Sales figures are often used in this kind of analysis because they give an aggregate indication of the new technology’s impact on the market.

The Fisher-Pry substitution model is often used to analyze a substitution such as electronic for print media in the reference library. The fraction f of the total market taken by the new technology is commonly expressed as a function of time t:

$$f(t) = \frac{1}{1 + c\,e^{-bt}}$$

where t is time, and b and c are empirically determined coefficients. In this case, b and c were determined from data provided by the Association of Research Libraries for the years 1992 to 2004.
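The coefficients can be estimated by linearizing the model: taking the log-odds of f turns the S-curve into a straight line in t. The sketch below is a minimal illustration, not the procedure used to produce Figure 1. It assumes the shares have already been computed as electronic / (electronic + print) expenditure, measures time in years from a chosen origin (1990 here, as an assumption), and uses ordinary least squares; the function names are hypothetical. No ARL figures are reproduced, so the usage section fits synthetic shares generated from known coefficients.

```python
import math


def fisher_pry(t, b, c):
    """Fisher-Pry share of the market held by the new technology at time t."""
    return 1.0 / (1.0 + c * math.exp(-b * t))


def electronic_share(electronic, print_):
    """Share of total spending going to the new (electronic) medium."""
    return electronic / (electronic + print_)


def fit_fisher_pry(times, shares):
    """Estimate (b, c) by least squares on the linearized model.

    Rearranging f = 1 / (1 + c * exp(-b * t)) gives
        ln(f / (1 - f)) = b * t - ln(c),
    a straight line in t with slope b and intercept -ln(c).
    Every share must lie strictly between 0 and 1.
    """
    ys = [math.log(f / (1.0 - f)) for f in shares]
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(ys) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, ys))
    sxx = sum((t - t_mean) ** 2 for t in times)
    b = sxy / sxx
    c = math.exp(-(y_mean - b * t_mean))
    return b, c


if __name__ == "__main__":
    # Synthetic check, NOT ARL data: t = years since 1990, crossover assumed at t = 18.
    true_b = 0.22
    true_c = math.exp(true_b * 18)
    years = list(range(2, 15))  # t = 2..14, i.e. 1992..2004
    shares = [fisher_pry(t, true_b, true_c) for t in years]
    b, c = fit_fisher_pry(years, shares)
    # The crossover (f = 0.5) occurs where c * exp(-b * t) = 1, i.e. t = ln(c) / b.
    print(f"b = {b:.3f} per year, crossover at t = {math.log(c) / b:.1f}")
```

With real data the points scatter around the line, and shares very close to 0 or 1 dominate the log-odds, so a weighted or nonlinear fit may be preferable.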

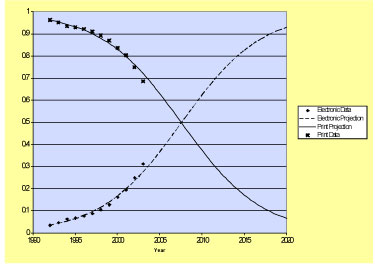

When these data are analyzed with the Fisher-Pry method, the result is the graph shown in Figure 1. It clearly indicates that the substitution of electronic for print reference materials is well under way. The model projects the crossover point (50% electronic) in 2008 and 90% substitution ten years later.

Figure 1. Data: http://www.arl.org

Taking 1990 as the beginning of the substitution and using the middle projection, reaching 90% substitution by electronic media will take 28 years.
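The crossover and 90% dates are enough to pin down the growth coefficient b. Setting f to 1/2 and to 0.9 in the Fisher-Pry equation gives the two times below; the resulting value of b is only what the 2008 and 2018 projections imply, not a coefficient reported from the ARL fit.

```latex
\begin{aligned}
f(t_{50}) = \tfrac{1}{2} &\;\Rightarrow\; c\,e^{-b t_{50}} = 1 \;\Rightarrow\; t_{50} = \frac{\ln c}{b},\\
f(t_{90}) = 0.9 &\;\Rightarrow\; c\,e^{-b t_{90}} = \tfrac{1}{9} \;\Rightarrow\; t_{90} = \frac{\ln c + \ln 9}{b},\\
t_{90} - t_{50} = \frac{\ln 9}{b} = 10\ \text{years} &\;\Rightarrow\; b = \frac{\ln 9}{10} \approx 0.22\ \text{per year}.
\end{aligned}
```

The 28-year span from 1990 to 2018 is then 18 years of approach to the crossover plus 10 years from crossover to 90%.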

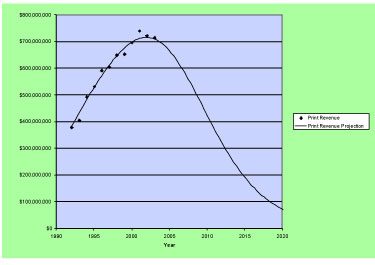

One of the most interesting, and most insidious, aspects of this type of substitution in a growing market is that a large share of the substitution has already taken place before the old technology sees two successive years of decreased revenue. That is the case here as well: half of the total time to 90% substitution has elapsed before print media experience two successive years of decline, as shown in Figure 2.

Figure 2. Print Revenue Projections During Transition
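Why the decline shows up so late can be sketched numerically. Print revenue is the total market times the share not yet substituted, P(t) = M(t)(1 - f(t)); while the total market M(t) grows, its gains mask the early loss of share. The sketch below uses hypothetical inputs: b = 0.22 per year with the crossover 18 years after 1990 (the values implied by the 2008 and 2018 dates in the text) and a made-up total-market growth of 6% per year. The helper names are illustrative, not part of the original analysis.

```python
import math


def fisher_pry(t, b, c):
    """Fisher-Pry share of the market held by the new technology at time t."""
    return 1.0 / (1.0 + c * math.exp(-b * t))


def print_revenue(t, b, c, growth, market0=1.0):
    """Old-technology revenue: total market times the share not yet substituted."""
    market = market0 * (1.0 + growth) ** t
    return market * (1.0 - fisher_pry(t, b, c))


def first_two_year_decline(b, c, growth, horizon=60):
    """First year t with P(t) < P(t-1) and P(t+1) < P(t), or None if none occurs."""
    revenue = [print_revenue(t, b, c, growth) for t in range(horizon + 2)]
    for t in range(1, horizon + 1):
        if revenue[t] < revenue[t - 1] and revenue[t + 1] < revenue[t]:
            return t
    return None


if __name__ == "__main__":
    # Hypothetical inputs: t = years since 1990, crossover at t = 18, 6% market growth.
    b = 0.22
    c = math.exp(b * 18)
    t90 = (math.log(c) + math.log(9)) / b
    start = first_two_year_decline(b, c, growth=0.06)
    print(f"two-year decline starts at t = {start}; 90% reached at t = {t90:.1f}")
```

With these made-up inputs the first two-year decline begins at t = 14 (2004), about half of the roughly 28 years to 90% substitution; with a flat market (growth = 0) print revenue falls from the first year. Other growth rates shift the date, so this only illustrates the mechanism behind Figure 2.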

Free Open Source Content – A Special Challenge

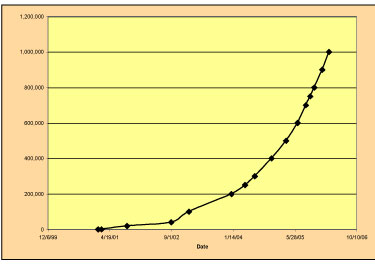

There is an additional substitution going on: collaborative, user-generated content is substituting for traditional publishing. The reference industry is a pioneer of this substitution with Wikipedia [https://en.wikipedia.org/wiki/Main_Page]. This is a substitution within electronic reference resources, and unfortunately we have no data indicating how it is progressing. Revenue is not a good surrogate for this type of substitution, because the results of the Wikipedia effort are available for free. The only possible measure would be the number of accesses, or the amount of time, that people spend using Wikipedia versus traditional reference resources. Wikipedia is certainly growing fast (Figure 3), in spite of professional criticism of the quality of the effort. Figure 3 shows the growth in the number of English articles, which is projected to be:

2007: 1.7M

2008: 4M

2009: 8.5M

2010: 18M

Figure 3. Wikipedia Articles in English – Source: Wikipedia
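The projected counts above imply a growth rate that is easy to check. This small sketch (the name `growth_factors` is illustrative) computes the year-over-year factors from the listed projections only; it does not use or reproduce the underlying Wikipedia statistics.

```python
# Projected English Wikipedia article counts listed above, in millions.
projected = {2007: 1.7, 2008: 4.0, 2009: 8.5, 2010: 18.0}


def growth_factors(series):
    """Ratio of each year's value to the previous year's value."""
    years = sorted(series)
    return {year: series[year] / series[prev] for prev, year in zip(years, years[1:])}


if __name__ == "__main__":
    for year, factor in growth_factors(projected).items():
        print(year, round(factor, 2))
```

Each step is a factor of slightly more than two, so the projection amounts to the article count more than doubling every year.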

The transformation of the reference library is not complete. Many factors, trends and driving forces could affect its future. I think the two most important trends affecting the future of the reference library, and by association the reference publishing industry, are search engines vs. indexed collections, and proprietary vs. open content creation.

Search engines select the information delivered to you by matching your keywords to the content of the documents they search, according to each engine’s algorithm. They do not deliver the information that is “best” for the researcher’s purpose, as a reference librarian would, nor do they verify its authority, as indexed and abstracted peer-reviewed articles, books and reports do. Most search engines favor documents that are current, are used frequently and are linked to by many other documents (a type of authority measure). What search engines provide is quick, cheap access to over a billion web sites in the world. Given the high costs of the traditional system and the rapid improvement of search engines, I see search engines taking over many of the services now provided by reference librarians and the reference publishing industry.

[Ed note: I wrote about this trend in the articles “Living in a World of Infinite Input” and “21st Century Skills – The Foundation Skill”. I believe the role of the reference librarian will evolve but will be even more critical as information expands exponentially.]
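The “linked to by many other documents” criterion mentioned above can be made concrete with a simplified link-authority calculation in the spirit of PageRank. This is a textbook power-iteration sketch on a made-up four-page graph, with an assumed damping factor of 0.85 and hypothetical names; it is not the ranking algorithm of any particular search engine, which combines many more signals.

```python
def link_authority(links, damping=0.85, iterations=50):
    """Simplified PageRank-style scores by power iteration.

    links maps each page to the list of pages it links to. A page's score is
    shared equally among its outgoing links; pages with no outgoing links
    spread their score evenly over all pages. Scores sum to 1.
    """
    pages = set(links) | {q for targets in links.values() for q in targets}
    n = len(pages)
    score = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in pages}
        for p in pages:
            targets = links.get(p, [])
            if targets:
                share = damping * score[p] / len(targets)
                for q in targets:
                    new[q] += share
            else:
                for q in pages:
                    new[q] += damping * score[p] / n
        score = new
    return score


if __name__ == "__main__":
    # Hypothetical web: three pages link to "c", and "c" links back to "a".
    web = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}
    for page, s in sorted(link_authority(web).items(), key=lambda kv: -kv[1]):
        print(page, round(s, 3))
```

The page with the most incoming links ranks highest, whether or not its content is the best answer to the researcher’s question, which is the gap between link-based authority and a reference librarian’s judgment.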

The Education Business Blog

The Education Business Blog